Varnish Cache example: SSL offloading

About

NOTE: Article written back in 2016. Still relevant today.

This article explains how Varnish can be used to cache HTTP requests and speed up a website.

The main peculiarities of this example are:

- Using Varnish version 4 – www.varnish-cache.org

- Configuring Varnish as proxy cache.

- Assuming SSL offloading happens elsewhere: https (port 443) hits Apache (or Nginx, or an AWS Load Balancer...), which then forwards the traffic to Varnish.

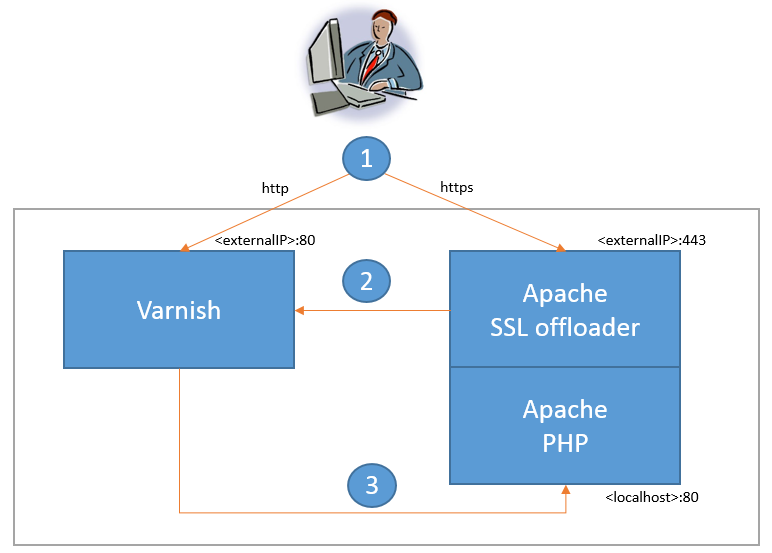

Consider this diagram:

User requests are either http:// (port 80) or https:// (port 443).

http:// requests are simply redirected to https://; for simplicity, Varnish performs this redirect in this example.

https:// requests go to an SSL offloader, in this case Apache for simplicity.

Apache forwards the requests to Varnish over plain http:// (Varnish doesn't support SSL), and Varnish caches the responses.

Apache adds a header so Varnish knows that the http:// request was originally https://.

The same Apache server then receives the http:// misses from Varnish, on a different virtual host listening on 127.0.0.1 port 80.

Varnish installation

Install Varnish from the package repository, so it is easier to keep up to date.

Once installed, Varnish listens by default on *:6081 (HTTP traffic) and localhost:6082 (management interface) and uses an in-memory cache as its storage backend.

In my example I just want to change the listening address from *:6081 to my external IP on port 80. For example, on Debian:

1. Create your own config file:

sudo cp /etc/varnish/default.vcl /etc/varnish/myconfig.vcl

2. Edit the systemd unit file for the Varnish service:

/lib/systemd/system/varnish.service

[Service]
...
ExecStart=/usr/sbin/varnishd -j unix,user=vcache -F -a 94.23.158.48:80 -a [2001:41d0:8:8f89::1]:80 \
          -T localhost:6082 -f /etc/varnish/myconfig.vcl -S /etc/varnish/secret -s malloc,256m
...

3. Replace the IPs with your own and then run:

sudo systemctl daemon-reload
sudo service varnish restart

Notes:

- To force Varnish to listen on a particular IP (not all of them) we use the -a parameter (it can be used multiple times, as shown in the example with both the IPv4 and the IPv6 address). I have also specified a custom .vcl file with the -f parameter; that is where all the magic happens.

- The part malloc,256m means Varnish will store cached content in RAM, using up to 256 MB (so a restart clears it all). Read about which storage backend is right for you: https://www.varnish-cache.org/docs/trunk/users-guide/storage-backends.html

- In Ubuntu this config would be in “/etc/default/varnish” and the file has a different format, but it is very similar: keep in mind you just want to change the listening IP and port, plus the path to your new .vcl file.
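Once Varnish has been restarted, it is worth confirming that it really listens on the new address before moving on. A minimal Python sketch for that, assuming Python 3 is available on the server; `is_listening` is a hypothetical helper, and the addresses to test are whatever you passed to varnishd with -a and -T:

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Hypothetical helper: it only proves that *something* accepts connections
    on that address, not that it is Varnish.
    """
    try:
        # create_connection resolves the address and works for IPv4 and IPv6 literals.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, network unreachable, etc.
        return False
```

For example, `is_listening("94.23.158.48", 80)` should return True once the restart has succeeded (replace the IP with yours); `ss -ltn` shows the same information from the shell.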

Varnish VCL configuration

Once Varnish is running and listening on the right ports you need to configure two basic things:

- The backend (or backends): this is where Varnish sends requests for anything it does not have in its cache (misses).

- How to cache (if different from its defaults).

Basic configuration

/etc/varnish/myconfig.vcl

vcl 4.0;

# Default backend definition. Set this to point to your content server.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

With this default, Varnish fetches anything it needs from localhost port 8080. In our case the Apache backend listens on 127.0.0.1 port 80, so change .port to "80".

By default Varnish caches very little, as it follows the HTTP caching rules strictly to make sure it doesn't break anything. Depending on your application it might cache some content, but consider for example:

- Any request with a Cookie header will NOT be cached by default.

- Any request with an Authorization header (logged-in users) will NOT be cached by default.
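The rules above come from Varnish's built-in vcl_recv. As a rough illustration, here is a simplified Python model of that decision; it leaves out some edge cases, and `builtin_recv_action` is a hypothetical name, not a Varnish API. "hash" means the request is looked up in the cache, "pass" means it always goes to the backend:

```python
from typing import Mapping

# Methods the built-in VCL recognises; anything else is piped to the backend.
KNOWN_METHODS = {"GET", "HEAD", "PUT", "POST", "TRACE", "OPTIONS", "DELETE"}


def builtin_recv_action(method: str, headers: Mapping[str, str]) -> str:
    """Simplified model of Varnish 4's built-in vcl_recv: 'pipe', 'pass' or 'hash'."""
    names = {name.lower() for name in headers}  # header names are case-insensitive
    if method not in KNOWN_METHODS:
        return "pipe"  # unknown methods (e.g. CONNECT) go straight to the backend
    if method not in ("GET", "HEAD"):
        return "pass"  # only GET and HEAD are cacheable by default
    if "authorization" in names or "cookie" in names:
        return "pass"  # possibly private content: never served from cache
    return "hash"  # look the object up in the cache
```

A single Google Analytics cookie is enough to turn an otherwise cacheable GET into a "pass", which is why the custom config below strips cookies from static files.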

At this point, check that your website still works correctly and that the response headers include some Varnish headers, for example:

Via: 1.1 varnish-v4
Age: 0
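When checking responses by hand or in a script, the Via and Age headers give a quick hit/miss signal. A rough Python heuristic, assuming you already have the response headers as a dict; `cache_status` is a hypothetical helper. It cannot tell a miss apart from a pass, or from a hit on an object less than one second old, since all of these report Age: 0:

```python
from typing import Mapping


def cache_status(headers: Mapping[str, str]) -> str:
    """Guess 'hit', 'miss' or 'not-varnish' from response headers (heuristic only)."""
    lower = {name.lower(): value for name, value in headers.items()}
    if "varnish" not in lower.get("via", "").lower():
        return "not-varnish"  # no Via header mentioning Varnish
    try:
        age = int(lower.get("age", "0"))
    except ValueError:
        age = 0  # malformed Age header: treat as freshly fetched
    # Age > 0: served from cache; Age 0: usually fetched from the backend.
    return "hit" if age > 0 else "miss"
```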

Custom configuration

The power of Varnish lies in the fact that you can customize every stage of the process in which Varnish decides what to cache and how.

In simple terms, Varnish reads a request and checks whether it is in the cache. If it is (hit), the response is delivered directly; if it is not (miss), Varnish requests it from the Apache/PHP server (backend), stores it and delivers it. Its workflow is shown in detail in this diagram: varnish_flow_4_0.png
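To make the hit/miss sequence concrete, here is a toy Python model of it. This only illustrates the order of the steps, not how Varnish is implemented; the `TinyCache` class and its `fetch` callback (standing in for the Apache/PHP backend) are hypothetical. The default TTL of 120 seconds mirrors Varnish's default_ttl parameter:

```python
import time
from typing import Callable, Dict, Tuple


class TinyCache:
    """Toy model of lookup -> hit or miss -> fetch -> store -> deliver."""

    def __init__(self, fetch: Callable[[str], str], ttl: float = 120.0) -> None:
        self.fetch = fetch  # hypothetical stand-in for the backend
        self.ttl = ttl      # seconds an object stays fresh
        self.store: Dict[str, Tuple[float, str]] = {}  # url -> (stored_at, body)

    def get(self, url: str) -> Tuple[str, str]:
        now = time.monotonic()
        entry = self.store.get(url)
        if entry is not None and now - entry[0] < self.ttl:
            return "hit", entry[1]     # delivered straight from the cache
        body = self.fetch(url)         # miss: ask the backend
        self.store[url] = (now, body)  # store it for the next request
        return "miss", body
```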

This is what Varnish does with this simple custom config:

- Removes the “Cookie” and “Set-Cookie” headers from requests and responses for images and static files, because:

  - By default Varnish will not give you a cached result if your request has a Cookie, as this means you might be authenticated and accessing private content, and it will not cache responses that carry Set-Cookie. The problem is that WordPress and Google Analytics set cookies for your site, which stops Varnish from caching almost anything.

- Stops Varnish from trying to cache big files.

  - Caching big files has no speed benefit, as delivering them is limited by bandwidth, and they just use up cache memory.

- Redirects http to https.

  - My site is https only, and it is more efficient to redirect directly from Varnish.

/etc/varnish/myconfig.vcl (full)

vcl 4.0;

# Default backend definition. Set this to point to your content server.
backend default {
    .host = "127.0.0.1";
    .port = "80";
}

sub vcl_recv {
    # Redirecting to https (matches abadcer.com and www.abadcer.com).
    if (req.http.host ~ "^(?i)(www\.)?abadcer\.com$" && req.http.X-Forwarded-Proto != "https") {
        set req.http.x-redir = "https://" + req.http.host + req.url;
        return (synth(850, "Moved permanently"));
    }

    # Large static files are delivered directly to the end-user without
    # waiting for Varnish to fully read the file first.
    # (Varnish 4 also supports streaming via beresp.do_stream in vcl_backend_response().)
    if (req.url ~ "^[^?]*\.(mp[34]|iso|rar|tar|tgz|gz|wav|zip|bz2|xz|7z|avi|mov|ogm|mpe?g|mk[av]|webm)(\?.*)?$") {
        return (pipe);
    }

    # Removing Cookie from static requests regardless of query string.
    if (req.url ~ "(?i)\.(?:css|gif|ico|jpeg|jpg|js|json|png|swf|woff)(?:\?.*)?$") {
        unset req.http.cookie;
    }
}

sub vcl_backend_response {
    # Removing Set-Cookie from static responses regardless of query string.
    if (bereq.url ~ "(?i)\.(?:css|gif|ico|jpeg|jpg|js|json|png|swf|woff)(?:\?.*)?$") {
        unset beresp.http.set-cookie;
    }
}

sub vcl_synth {
    if (resp.status == 850) {
        set resp.http.Location = req.http.x-redir;
        # 301 matches the "Moved permanently" reason set in vcl_recv.
        set resp.status = 301;
        return (deliver);
    }
}

Of course, you have to check whether these customizations apply to your case. This works well for me.
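Before reloading, you can check what the regular expressions in the config actually match. Varnish uses PCRE; Python's re module behaves the same for these patterns, except that Python wants inline flags such as (?i) at the very start of a pattern, so this sketch passes re.IGNORECASE instead. Note that a character class like `[wwww\.]?` matches a single optional "w" or ".", not a "www." prefix; the group `(www\.)?` does. The helper `vcl_recv_branch` is hypothetical and only mimics the order of the checks in vcl_recv:

```python
import re

# Host check: a group matches the optional "www." prefix...
HOST_OK = re.compile(r"^(www\.)?abadcer\.com$", re.IGNORECASE)
# ...while a character class only matches one optional "w" or ".".
HOST_CHAR_CLASS = re.compile(r"^[wwww\.]?abadcer\.com$", re.IGNORECASE)
# Large files that are piped (case-sensitive, as in the VCL).
LARGE_FILE = re.compile(
    r"^[^?]*\.(mp[34]|iso|rar|tar|tgz|gz|wav|zip|bz2|xz|7z|avi|mov|ogm|mpe?g|mk[av]|webm)(\?.*)?$"
)
# Static files whose cookies are stripped.
STATIC = re.compile(r"\.(?:css|gif|ico|jpeg|jpg|js|json|png|swf|woff)(?:\?.*)?$", re.IGNORECASE)


def vcl_recv_branch(host: str, url: str, forwarded_proto: str = "") -> str:
    """Which branch of the vcl_recv above a request would take (VCL '~' is a search)."""
    if HOST_OK.search(host) and forwarded_proto != "https":
        return "redirect"
    if LARGE_FILE.search(url):
        return "pipe"
    if STATIC.search(url):
        return "strip-cookie"
    return "default"
```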

Other things you can do with Varnish

- Exclude certain URLs from caching completely:

# under vcl_recv()
if (req.url ~ "^/(owncloud|wp-admin)/") {
    return (pipe);
}

- Force everything to be cached.

  - Be careful with this: it ignores any cache headers and will even cache errors (unless you exclude them, as this example does):

# under vcl_backend_response()
if (beresp.status < 400) {
    set beresp.ttl = 30m;
    return (deliver);
}

- Compress some content if the backend hasn't already done so:

# under vcl_backend_response()
if (beresp.http.content-type ~ "(text|javascript|json|plain|xml)") {
    set beresp.do_gzip = true;
}

- Remove the “Vary: User-Agent” header from responses.

  - This improves the hit ratio, as many backend applications send this header in responses. If you don't have URLs that return different content based on User-Agent, you should do this; otherwise each browser needs its own cached copy:

# under vcl_backend_response()
if (beresp.http.Vary ~ "User-Agent") {
    set beresp.http.Vary = regsuball(beresp.http.Vary, ",? *User-Agent *", "");
    set beresp.http.Vary = regsub(beresp.http.Vary, "^, *", "");
    if (beresp.http.Vary == "") {
        unset beresp.http.Vary;
    }
}
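To see what the Vary rewrite does to typical header values, here is a Python port of the same two substitutions, assuming Python's re module matches these simple patterns the same way PCRE does (regsuball replaces every match, regsub only the first). `strip_user_agent_from_vary` is a hypothetical name, and None stands for an unset header:

```python
import re
from typing import Optional


def strip_user_agent_from_vary(vary: Optional[str]) -> Optional[str]:
    """Port of the regsuball/regsub steps above; None means the header is unset."""
    if vary is None or "User-Agent" not in vary:
        return vary  # nothing to do, header left as-is
    vary = re.sub(r",? *User-Agent *", "", vary)  # regsuball: every occurrence
    vary = re.sub(r"^, *", "", vary, count=1)     # regsub: first occurrence only
    return vary or None                           # empty value -> unset the header
```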

Other examples and documentation

- Varnish Examples

- VCL Language

- VCL built-in subroutines

Key things you should know

- Varnish always runs its default built-in VCL. If you add some code in your custom .vcl, for example in “vcl_backend_response”, Varnish runs your code and then continues with the built-in code for that subroutine.

  - If you don't want that, end your code with a “return()”: this is how you change state in the Varnish workflow. For example, from state “vcl_recv” you can move to state “vcl_pass” with “return(pass)”.

- A response served by Varnish has extra headers.

  - If Age = 0, the response was usually fetched from the backend (miss).

  - If Age > 0, it is a cached copy and the number is the age of the content in seconds (hit).

Via: 1.1 varnish
Age: 47

- You can have multiple backends and use them to distribute traffic (load balancing).

Varnish administration and troubleshooting

- Use varnishlog on the server to see in detail (all headers) how a request is received, delivered or fetched from the backend.

  - Example, see all misses for a particular URL:

sudo varnishlog -q 'ReqURL ~ "^/Scripts/jquery.js" and RespHeader:Age == 0'

- Use varnishtop to see the most common occurrences.

  - Example, see the most common backend requests from Varnish:

varnishtop -i BereqURL

- Use varnishadm to change some settings or perform administration tasks.

  - Example, check backend server health:

sudo varnishadm debug.health

- Use varnishadm to clear the cache.

  - Example, clear all objects under the URL /wp-content/ and then list the active bans:

sudo varnishadm "ban req.url ~ /wp-content/.*"
sudo varnishadm ban.list

- Use varnishstat to see real-time activity, misses, hits, requests, bytes, etc…

sudo varnishstat

- Restart Varnish:

sudo service varnish restart
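varnishstat also has a JSON mode (-j), which makes it easy to script a hit ratio. A minimal sketch, assuming your varnishstat supports -j; the counter names MAIN.cache_hit and MAIN.cache_miss are Varnish's, while the helper names are hypothetical. Varnish 4 puts the counters at the top level of the JSON and newer releases nest them under a "counters" key, so the sketch accepts both. The ratio ignores passed and piped requests:

```python
import json
import subprocess
from typing import Any, Dict


def counters(stats: Dict[str, Any]) -> Dict[str, Any]:
    """Counter map from varnishstat JSON, whether nested under 'counters' or not."""
    return stats.get("counters", stats)


def hit_ratio(stats: Dict[str, Any]) -> float:
    """cache_hit / (cache_hit + cache_miss); 0.0 when there has been no traffic yet."""
    c = counters(stats)
    hits = c["MAIN.cache_hit"]["value"]
    misses = c["MAIN.cache_miss"]["value"]
    total = hits + misses
    return hits / total if total else 0.0


def read_varnishstat() -> Dict[str, Any]:
    """Run varnishstat once in JSON mode (assumes it is on PATH and you have access)."""
    out = subprocess.run(["varnishstat", "-j"], capture_output=True, text=True, check=True)
    return json.loads(out.stdout)
```

On the server, `print(hit_ratio(read_varnishstat()))` prints the current ratio (run it with sudo if varnishstat needs it).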

Apache Configuration

Little to no change is necessary to a website application when putting Varnish in front of it. In this case, because the SSL offloading is done in Apache, our website needs to know that the http:// requests it receives from Varnish were actually https://.

With SSL offloading, a header has to be added to the request after it has been decrypted (the https:// to http:// step), so that Varnish and the backend know the original scheme. In Apache, just add this to the VirtualHost that does the SSL decryption (RequestHeader is provided by mod_headers):

RequestHeader set X-Forwarded-Proto "https"

As the VCL above shows, Varnish does not redirect to https:// when this header is set to "https", because the user's request was already https://.

In WordPress you need to do something similar; edit your wp-config.php and add this:

if (isset($_SERVER['HTTP_X_FORWARDED_PROTO']) && $_SERVER['HTTP_X_FORWARDED_PROTO'] === 'https') { $_SERVER['HTTPS'] = 'on'; }
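For a Python (WSGI) application behind the same setup, the equivalent of the wp-config.php line is a small middleware. A minimal sketch with a hypothetical `forwarded_proto_middleware` function; like the PHP line, it trusts the header blindly, which is only safe if the application cannot be reached except through Apache and Varnish:

```python
from typing import Any, Callable, Dict, Iterable

WSGIApp = Callable[[Dict[str, Any], Callable], Iterable[bytes]]


def forwarded_proto_middleware(app: WSGIApp) -> WSGIApp:
    """Mark the request as https when the SSL offloader says it was (hypothetical helper)."""

    def wrapped(environ: Dict[str, Any], start_response: Callable) -> Iterable[bytes]:
        if environ.get("HTTP_X_FORWARDED_PROTO") == "https":
            environ["wsgi.url_scheme"] = "https"  # used by WSGI apps to build URLs
            environ["HTTPS"] = "on"               # mirrors $_SERVER['HTTPS'] in PHP
        return app(environ, start_response)

    return wrapped
```

If you use Werkzeug (Flask), its ProxyFix middleware with x_proto=1 does the same job without custom code.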

Conclusion

Varnish is an HTTP accelerator. Its basic function is to cache responses, which it fetches automatically from the backend. It delivers content from its cache extremely fast (in my experience faster than Nginx) and makes your website significantly faster while reducing the load on the backend; it just needs RAM to store the cache.

Unless you do complicated coding within the VCL, it uses very little CPU. You will run out of bandwidth before CPU, and the RAM it needs is limited by your configuration. With JMeter I got a server delivering 5000 requests per second, using the full 1 Gbps link, while using 25% of its CPU (a 4-core CPU).
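As a sanity check, those figures are consistent with bandwidth being the limit: a saturated 1 Gbps link at 5000 requests per second works out to about 25 KB per response, ignoring TCP and HTTP overhead. The arithmetic as a tiny Python helper (`avg_response_bytes` is a hypothetical name):

```python
def avg_response_bytes(link_bits_per_s: float, requests_per_s: float) -> float:
    """Average bytes per response if the link is fully used (no protocol overhead)."""
    return link_bits_per_s / 8 / requests_per_s  # bits -> bytes, then per request


# 1 Gbps link saturated at 5000 requests per second (the JMeter figures above).
print(avg_response_bytes(1e9, 5000))  # 25000.0 bytes, about 25 KB per response
```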